The Sistine Chapel Problem

In a time when Large Language Models (LLMs) can write seemingly beautiful essays and solve hard coding problems in seconds, educators have to ask themselves a basic question: what is the most important thing about learning? The answer, which may surprise you, can be found in a park-bench conversation from a 1997 movie.

When we use an LLM to complete a task, whether it's coding or exploring a complex problem, we receive what appears to be a perfect product: well-structured, grammatically correct, and convincing. But this perfection masks an absence. We haven't wrestled with confused thoughts, haven't experienced the frustration of contradictions and bugs that won't resolve, haven't felt the satisfaction of finally understanding something that once seemed impenetrable.

Growth has always been imperfect by design. The crossed-out sentences, the failed attempts at running code, the flash of insight amid confusion: these are not bugs in the process of thinking but features. They represent understanding being forged rather than downloaded, knowledge earned rather than accessed. This tension between information and experience, between knowing about something and knowing it, predates artificial intelligence. But what if the greatest threat isn't that we work with AI, but that we risk losing the capacity for original thought? How will we cultivate innovation and deep expertise if we, and especially students, never experience the disorientation of not knowing? When every difficult question yields an instant, polished answer, who will develop the intellectual muscle to tackle problems that have no solutions yet? The struggle itself, the messy, frustrating, ultimately transformative process of grappling with complexity, may be where human capability actually lives: not in the answers we produce, but in the cognitive strength we build by searching for them.

In my view, a film more than twenty years old, about an intellectually gifted janitor from South Boston, offers an unexpected lens for understanding what we're about to lose.

What It Smells Like in the Sistine Chapel

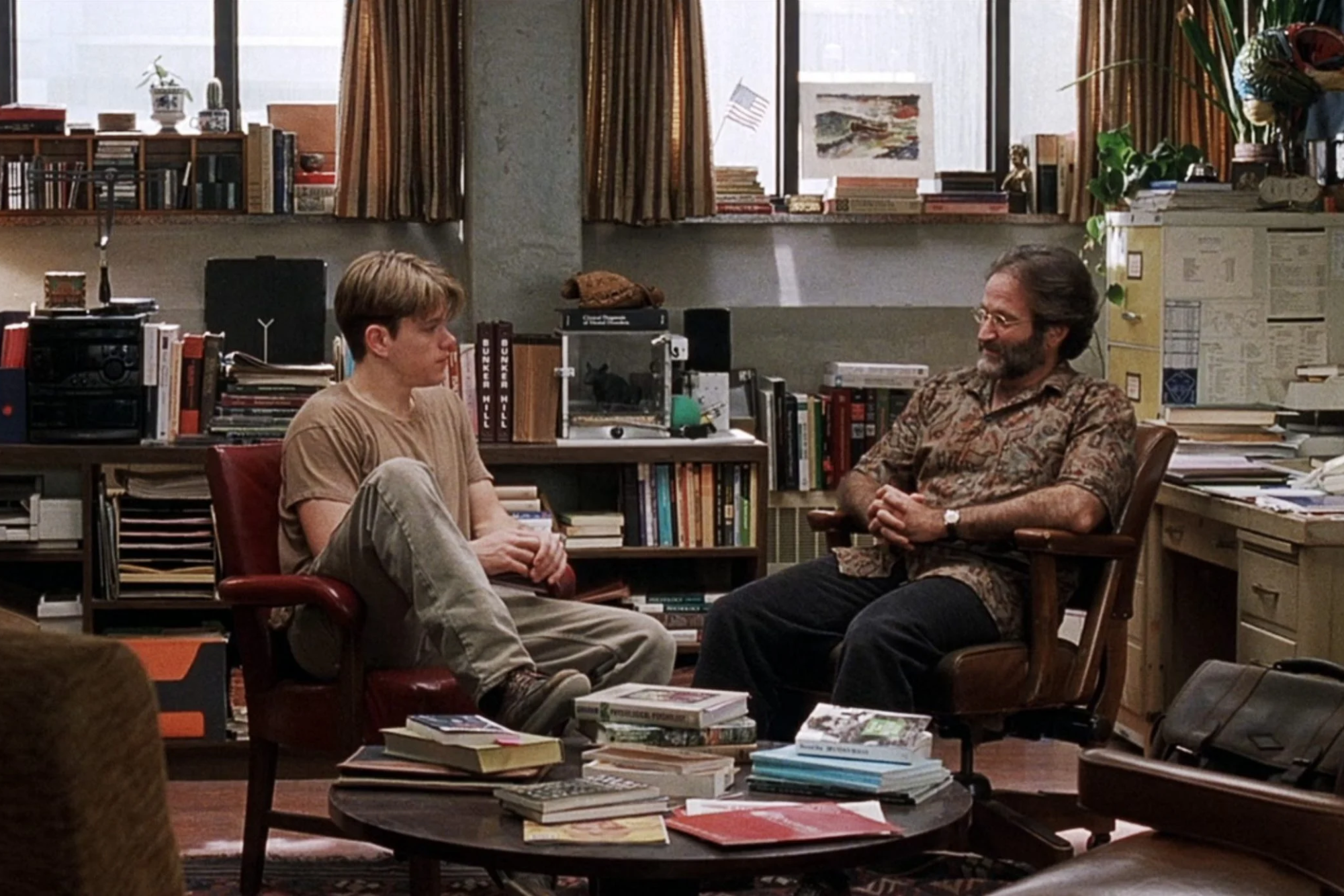

In Good Will Hunting, psychologist Sean Maguire (Robin Williams) confronts Will Hunting (Matt Damon), a brilliant but emotionally guarded young janitor who can recite facts about virtually anything, with a profound challenge about the nature of knowledge:

"If I asked you about art, you'd probably give me the skinny on every art book ever written. Michelangelo, you know a lot about him. Life's work, political aspirations, him and the pope, sexual orientations, the whole works, right? But I'll bet you can't tell me what it smells like in the Sistine Chapel. You've never actually stood there and looked up at that beautiful ceiling."

This scene draws a distinction that has become very important in our time. Large Language Models, like Will in the famous Harvard bar scene early in the film, can skim the surface of any topic: dates, facts, connections, analysis. They can tell you about Michelangelo's techniques, the chapel's dimensions, the theological symbolism of each panel. But they cannot tell you what it smells like in that sacred space: the trace of incense from morning mass, how your neck aches from craning upward, or the short hush that falls over tourists as they enter the chapel. They have never experienced the occasional gasp when someone spots Adam's finger. They cannot convey how small you feel standing beneath the human-made frescoes.

The "How" Versus the "Why"

Despite large advances in neuroscience-inspired machine learning, and despite evolutionary algorithms and reinforcement learning that let computers learn from failure, failure has taught machines optimization, not the weight of disappointment, not the frustration of learning. Philosophers approach this through the explanatory gap (Levine, 1983) or Dasein (Heidegger, 1927): machines process signals but never cross into sensation.

Without doubt, computers excel at the mechanics of knowledge: the what and how. They can demonstrate problem-solving steps, generate structurally sound arguments, and synthesize information from the many databases they have crawled or can access ad hoc. This is undeniably valuable for education. Students can receive instant feedback, explore ideas at their own pace, and access personalized tutoring, complementing what we do, for instance, in problem-based learning. Yet education, at its core, has always been about the why. Why does this mathematical proof matter? Why should we care about the fall of Rome? Why does this poem move us? Why do I control for collinearity with the Variance Inflation Factor (or why should I not)? These questions require not just information processing but understanding: the kind of insight that emerges from struggle, contemplation, and failure.

One of my undergraduate professors of economics and philosophy, Birger Priddat, once started the first lecture with a claim that stayed with us: "By the end of your studies, you should have read a stack of books at least taller than yourself. You need to experience it, you need to doubt it, at the risk of being wrong." When Sean challenges Will about the Sistine Chapel, he is making a similar point: there is knowledge you can access and knowledge you must undergo. The stack of books taller than yourself is not about the information contained within their pages, which any LLM could summarize in seconds. It's about becoming someone different by the time you reach the top: tired, changed, and carrying not just what you've read but who you've become through reading it. Something subtle is lost in the efficiency of stratospheric scholarship, flying at 30,000 feet above the actual methodological and theoretical terrain: the deep, sometimes frustrating engagement with a paper's methodology and sampling, the wrestling with its limitations, the slow dawning of what it really means in context. In thesis defenses, this trade-off becomes visible: students have genuinely worked hard and used the tools available to them, yet find themselves strangers to their own citations. It's not a failure of effort but a consequence of tools that make the wrong parts easy.

A recent MIT study that went viral claimed to show measurable IQ drops in 18- to 39-year-olds who regularly used GPT for problem-solving tasks, sparking a debate about AI's cognitive impacts. While the study's methodology and sample size invite legitimate skepticism, its central concern reflects well-established neuroscience. Research on neuroplasticity demonstrates that effortful learning, or what Bjork & Bjork (2011) term desirable difficulties, creates more robust neural pathways than passive reception of information. When we struggle with a problem, our brains strengthen synaptic connections through a process neuroscientists call long-term potentiation. Problem-solving engages deeper memory consolidation in the hippocampus and prefrontal cortex. fMRI studies show markedly different brain activation patterns when individuals visually engage, for instance via handwriting.

Your Move, Chief

In the movie, Sean continues his challenge to Will:

"You're an orphan, right? You think I know the first thing about how hard your life has been, how you feel, who you are, because I read Oliver Twist?"

Here's another realization: genuine knowledge is personal, shaped by our own experiences with other people. The bench scene serves as a reminder that transformative education is also relational, which is perhaps its most significant lesson. Sean's breakthrough with Will isn't the result of superior knowledge or clever reasoning. It comes from being vulnerable, from sharing his own grief over his wife's passing, and from having a real human connection. "Your move," he says, asking Will to engage authentically rather than simply reciting facts.

This relational dimension, the classroom discussions where ideas spark and clash, the mentorship that shapes not just what we know but who we become, cannot be algorithmically reproduced. From the educator's perspective, too, an LLM can provide feedback, but it cannot see the particular student before it or the line of thought that student is coming from, cannot recognize the moment when confusion shifts to comprehension, cannot share in the joy of discovery.

"Desirable Difficulties"

The idea is not to banish LLMs from education. They are powerful tools that, used thoughtfully, can enhance learning. Students can use them to explore ideas, check understanding, and overcome barriers to entry in complex subjects. In my own entrepreneurship teaching, I encourage students to use text, image, and video generation methods to imagine future products and services. In causal inference, I employ LLMs to look for potential confounders or omitted variables. That being said, we must resist the temptation to mistake the tool, a cognitive limb, for the purpose.

The key lies in preserving what cognitive scientists call desirable difficulties: the productive friction that actually enables learning. One way to do so may be written exams, to take a recent case in point from Jure Leskovec, Professor of Computer Science at Stanford University, one of the epicenters of LLM research. When LLMs remove all resistance from the educational process, they may inadvertently remove the very mechanisms through which understanding develops. It's the cognitive equivalent of using a GPS for every journey: efficient, certainly, but we never develop our own sense of direction. The job market might pay a premium for that very skill of being able to read a map.

The question now is how we might design friction back into AI-assisted learning. Recent syllabi suggest that students could use LLMs to explore multiple perspectives on a topic but be required to defend their own synthesis without algorithmic help. Or they might employ AI to generate counterarguments to their thesis, forcing them to strengthen their reasoning. The most promising educational applications of AI might be those that, by design, increase rather than decrease meaningful cognitive friction. Imagine an LLM that refuses to answer directly but instead guides students through productive confusion, or one that generates problems tailored to sit just beyond a student's current ability, in what Vygotsky called the zone of proximal development. Or students could use LLMs as sophisticated tutors that ask Socratic questions rather than supply answers, maintaining the struggle that builds intellectual muscle.

The Experience Game

As we integrate artificial intelligence into our educational systems, we might recall that moment on the bench when Sean tells Will about his late wife: "Those are the things I miss the most. The little idiosyncrasies that only I know about. That's what made her my wife." His point isn't about love; it's about the irreplaceable nature of lived experience. Those idiosyncrasies couldn't be summarized or transferred; they had to be discovered through years of attention, presence, and engagement.

Ironically, while modern LLMs might pass Turing's Imitation Game through text, they fail what we might call the "Sistine Chapel Test," which asks not whether they can describe experience convincingly, but whether they've had experience at all. Turing proposed to consider the question, "Can machines think?" Sean asks us whether knowledge without experience is knowledge at all.